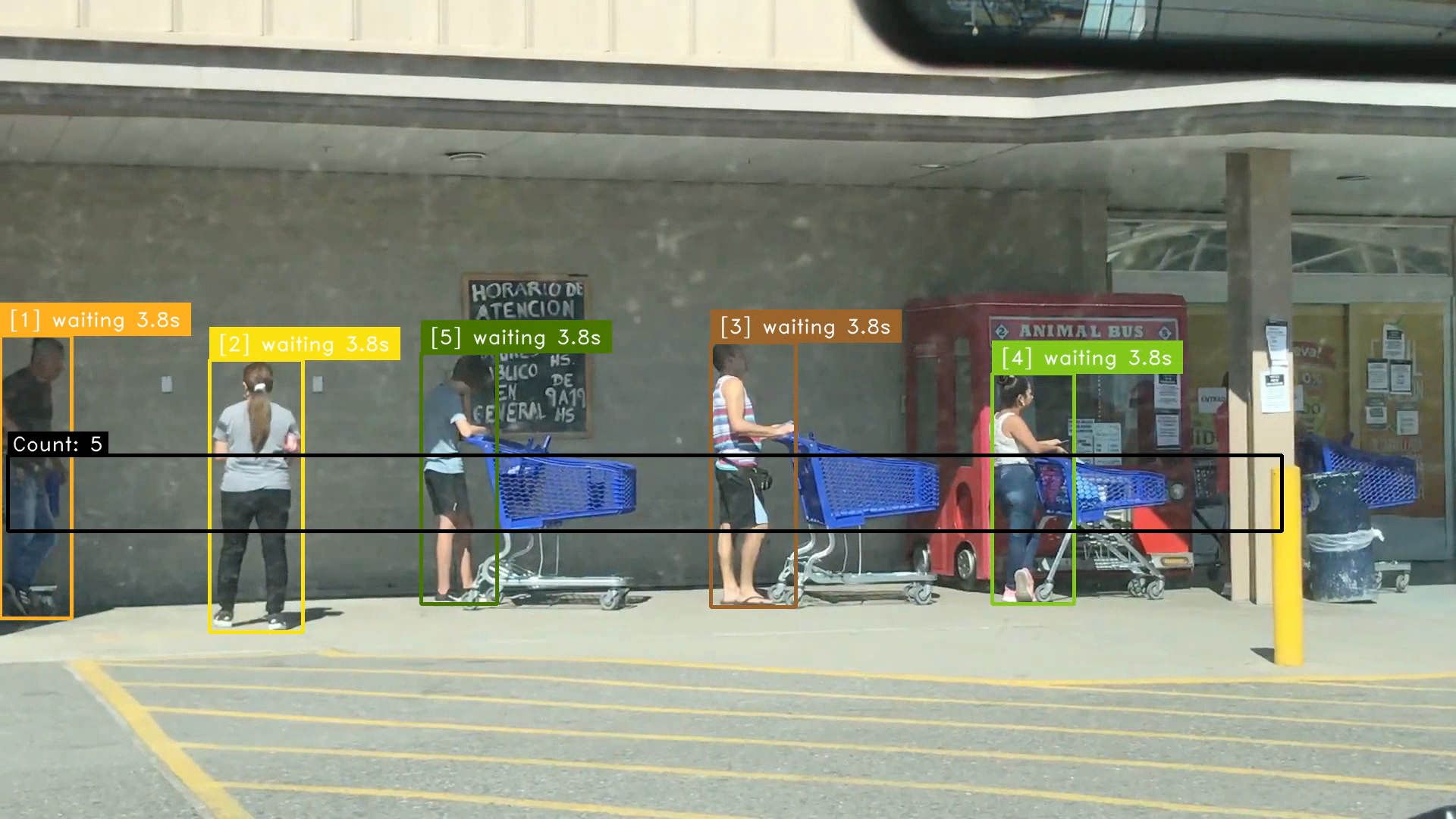

Instance segmentation and tracking

Instance segmentation is an advanced computer vision task that combines object detection with pixel-level segmentation. It identifies and outlines individual objects within an image or video, providing detailed masks that delineate the boundaries of each object. This is particularly useful in applications where precise object boundaries are important, such as autonomous driving, robotics, and medical imaging. By providing a richer representation of the visual world, instance segmentation enables tasks like object counting, tracking, and interaction analysis.
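
To make the idea of per-object masks concrete, here is a minimal sketch of how objects could be counted and boxed from a segmentation result. It assumes the result is available as a 2D label map in which 0 is background and each positive integer marks one object; that layout and the `summarize_instances` helper are illustrative assumptions, not the output format of any particular tool.

```python
def summarize_instances(label_map):
    """Return {instance_id: (pixel_count, (x_min, y_min, x_max, y_max))}.

    label_map is a 2D list of ints. 0 is treated as background
    (an assumed convention for this sketch).
    """
    stats = {}
    for y, row in enumerate(label_map):
        for x, instance_id in enumerate(row):
            if instance_id == 0:
                continue  # skip background pixels
            if instance_id not in stats:
                # [pixel_count, x_min, y_min, x_max, y_max]
                stats[instance_id] = [0, x, y, x, y]
            s = stats[instance_id]
            s[0] += 1
            s[1] = min(s[1], x)
            s[2] = min(s[2], y)
            s[3] = max(s[3], x)
            s[4] = max(s[4], y)
    return {k: (v[0], (v[1], v[2], v[3], v[4])) for k, v in stats.items()}


# Toy 3x5 label map containing two objects (ids 1 and 2).
label_map = [
    [0, 1, 1, 0, 0],
    [0, 1, 0, 0, 2],
    [0, 0, 0, 2, 2],
]
summary = summarize_instances(label_map)
print("objects found:", len(summary))
for instance_id, (pixels, box) in sorted(summary.items()):
    print(instance_id, pixels, box)
```

The pixel count follows the true shape of each object, while the bounding box is only the enclosing rectangle, which is all that object detection alone would provide.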

Instance segmentation is also useful when you need to measure the size of detected objects, as in drone image analysis. However, instance segmentation models are typically larger, slower, and less accurate than object detection models. They also require more data to train effectively, annotation is significantly more laborious, and training is harder. It is therefore often better to try object detection first, because it is easier and cheaper to test, and faster and more accurate to run. Use instance segmentation only when the application requires the outline of the object; otherwise, object detection is enough for most cases.
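
As an illustration of the size-measurement use case, the sketch below computes the area enclosed by an object outline with the shoelace formula and converts it to square meters. The outline coordinates and the ground sampling distance (`GSD_M_PER_PX`, in meters per pixel) are hypothetical values chosen for the example; in practice they would come from the segmentation result and the drone's flight parameters.

```python
def polygon_area(points):
    """Area of a simple polygon given as [(x, y), ...], via the shoelace formula."""
    twice_area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical outline in pixel coordinates: a 40 x 20 pixel rectangle.
outline = [(10, 10), (50, 10), (50, 30), (10, 30)]
area_px = polygon_area(outline)

# Hypothetical ground sampling distance: each pixel covers 0.05 m x 0.05 m.
GSD_M_PER_PX = 0.05
area_m2 = area_px * GSD_M_PER_PX ** 2

print(f"area: {area_px:.0f} px^2, about {area_m2:.2f} m^2")
```

A bounding box would give the same answer for this rectangle, but for irregular shapes only the outline yields the true area, which is the case where instance segmentation is worth its extra cost.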

How to train an instance segmentation model with DeepLab

Custom training is the process of fine-tuning a pre-trained model on a specific dataset so that it can recognize objects that are not included in the original training dataset.

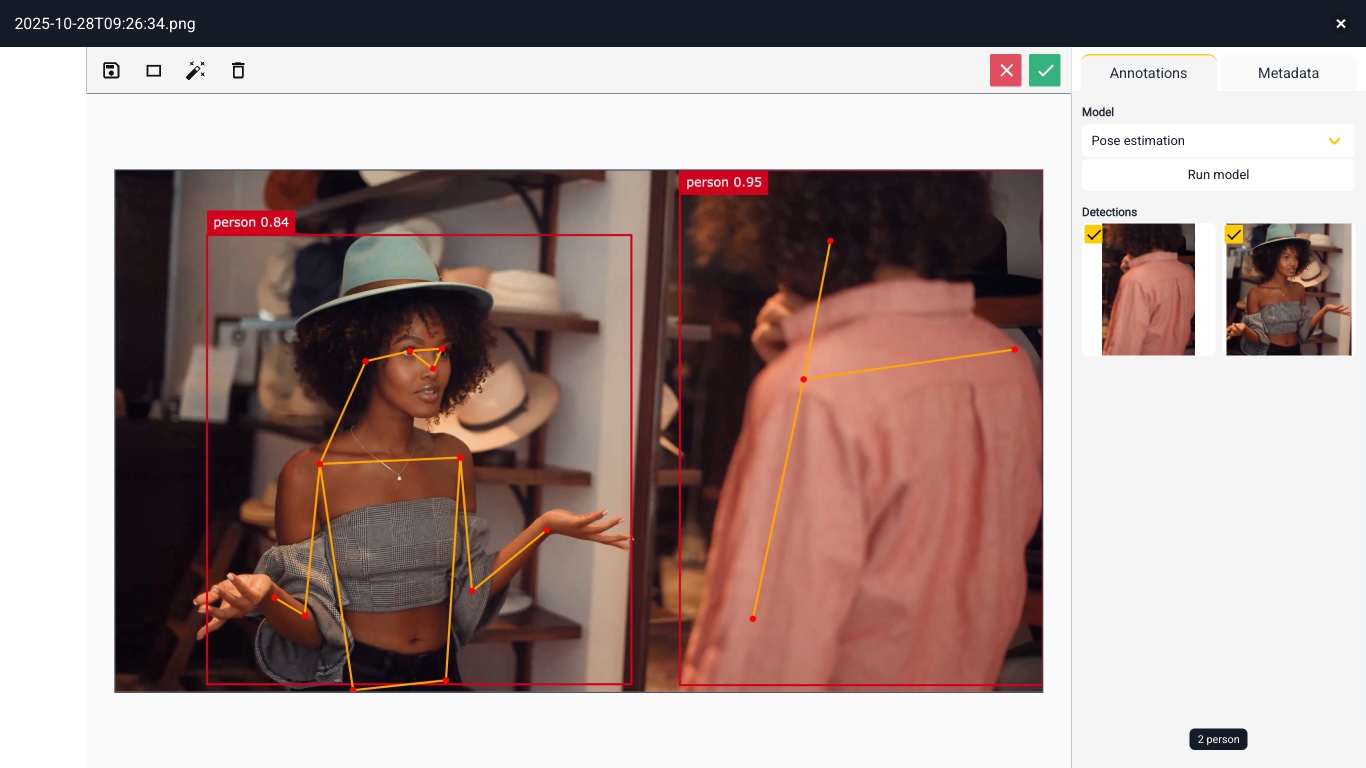

To train an instance segmentation model with DeepLab, you need to follow these steps:

- Prepare your dataset: create a new project folder and upload your images, then use the auto-segmentation tool to annotate each object with an outline polygon.

- Train the model: once the dataset is fully annotated, click "train" to launch the notebook, select the dataset folder, and start the training process.

- Run the model: once training has finished, you can run the model on your images or videos using the DeepLab app. Select the input file and the model, then click "run" to detect and segment the objects in the input file.
